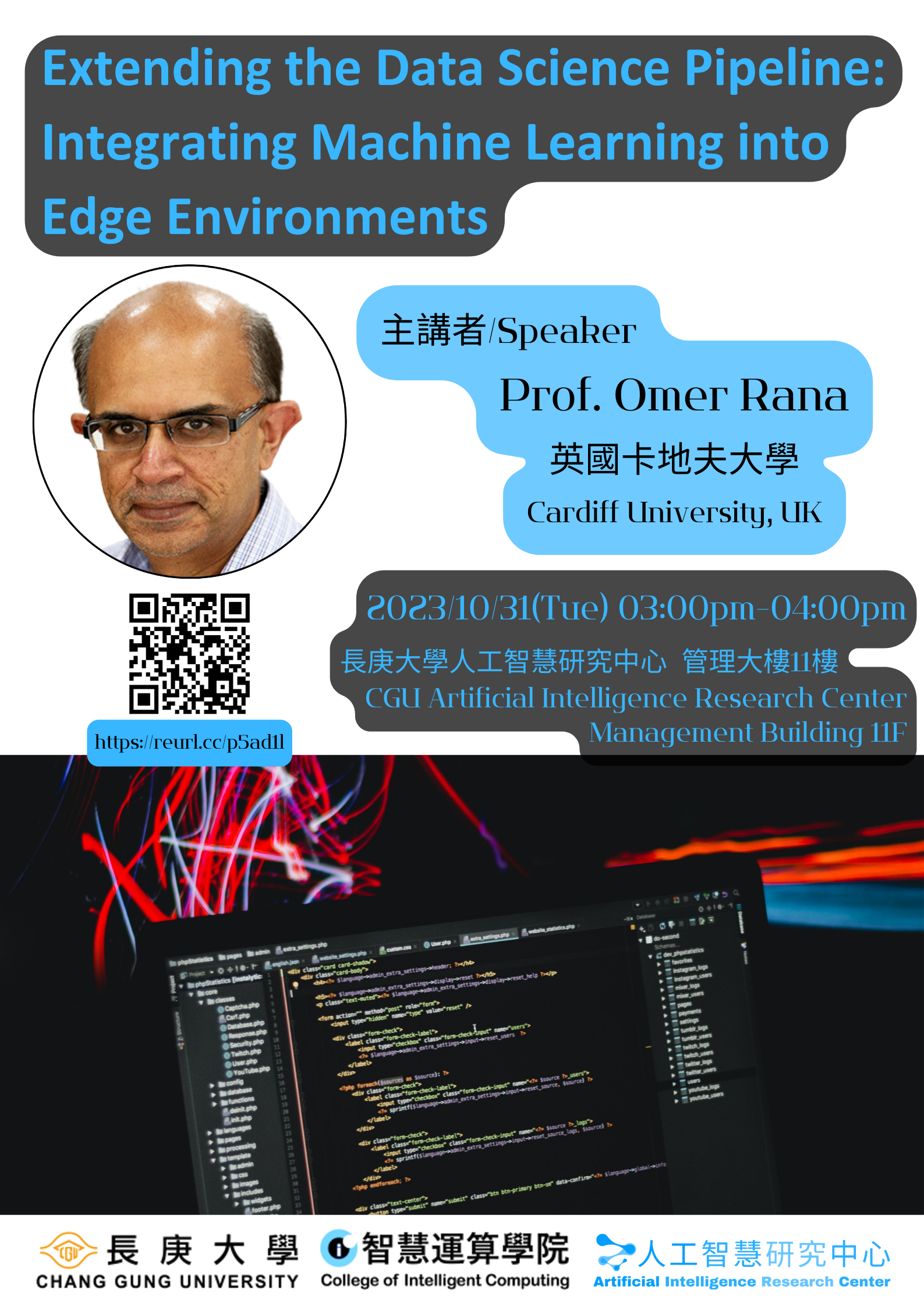

[Talk Announcement] 2023.10.31 "Extending the Data Science pipeline: Integrating Machine Learning into Edge Environments" - Professor Omer Rana, College of Physical Sciences and Engineering, Cardiff University, UK

Title: Extending the Data Science pipeline: Integrating Machine Learning into Edge Environments

Speaker: Professor Omer Rana, Cardiff University, UK

Time: 2023.10.31 (Tue) 15:00-16:00

Venue: Artificial Intelligence Research Center (11th floor, Management Building)

About the speaker:

Omer F. Rana is a Professor of Performance Engineering at Cardiff University, with research interests in high performance distributed computing, data analysis/mining and multi-agent systems. He is the Dean of International for the Physical Sciences and Engineering College. He holds a PhD in Parallel Computing & Neural Architectures from Imperial College, University of London, an MSc in Microelectronics from the University of Southampton, and a BEng in Information Systems Engineering from Imperial College, University of London.

Abstract:

Internet of Things (IoT) applications today involve data capture from sensors and devices that are close to the phenomenon being measured, with such data subsequently being transmitted to a cloud data centre for storage, analysis and visualisation. Currently, the devices used for data capture often differ from those used to subsequently carry out analysis on such data. The increasing availability of storage and processing devices closer to the data capture device, perhaps over a one-hop network connection or even directly connected to the IoT device itself, requires more efficient allocation of processing across such edge devices and data centres. Supporting machine learning and data analytics directly on edge devices also enables distributed (federated) learning, allowing user devices to be used directly in the inference or learning process. Scalability in this context needs to consider cloud resources as well as data distribution and initial processing on edge resources closer to the user.

This talk investigates how a data analytics pipeline can be deployed across the cloud-edge continuum. Understanding what should be executed at a data centre and what can be moved to an edge resource remains an important challenge, especially with the increasing capability of edge devices. The following questions are addressed in this talk:

1. How do we partition machine learning algorithms across Edge-Network-Cloud resources (often referred to as the "Cloud-Edge Continuum") based on constraints such as privacy, capacity and resilience?
2. Can machine learning algorithms be adapted based on the characteristics of the devices on which they are hosted? What does this mean for stability/convergence vs. performance?

Organizers: Artificial Intelligence Research Center, College of Intelligent Computing

※ No registration is required for this event.